What happens when data-driven insights meet human-centered values? And in an age where AI shapes everything we see and believe, how do we ensure communication stays grounded in empathy, ethics, and real human stories?

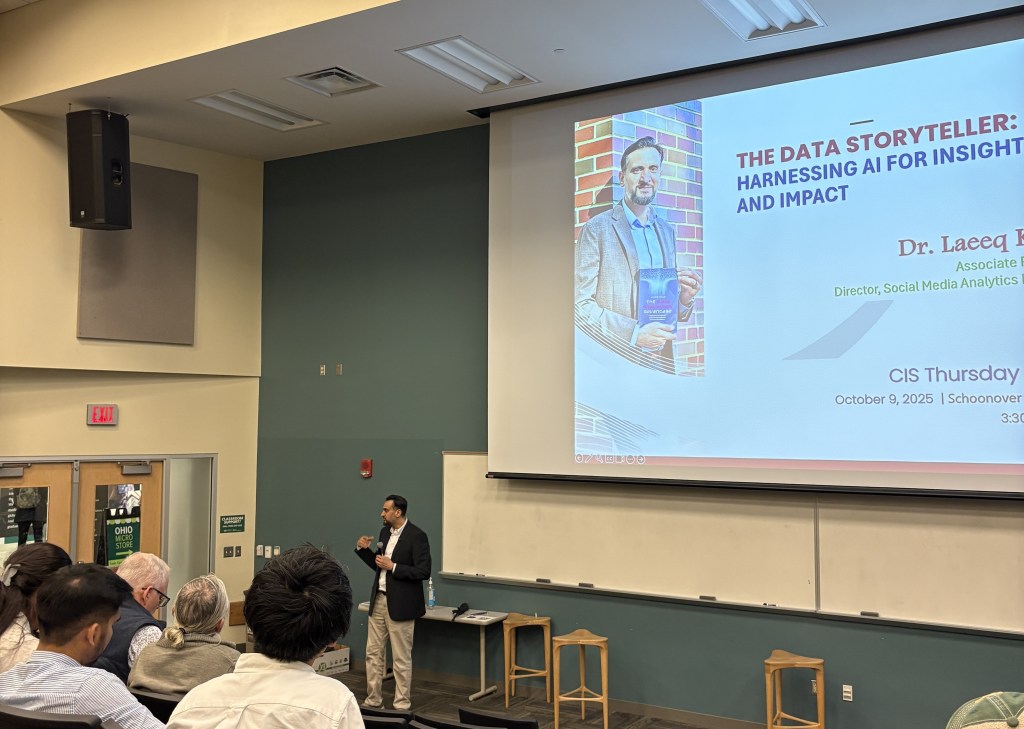

I sat down with Dr. Janice M. Collins on the Spotlight on Research Podcast from Ohio University’s E.W. Scripps School of Journalism to tackle these questions and explore what it really means to have a “data analytics advantage” in today’s media landscape.

Here’s the truth about analytics in communication: The advantage isn’t just having data. It’s knowing how to interpret it meaningfully and ethically. Data analytics moves us beyond gut feelings and into insight-driven communication. It helps us understand audiences in real time—what they feel, believe, and respond to. But there’s a catch we can’t ignore: behind every data point is a human story. A comment. A click. A sentiment. A person. If we lose sight of that, we risk treating audiences as abstractions—numbers on a dashboard instead of living, breathing communities with needs, fears, and hopes.

This book didn’t start in a boardroom. It started in a classroom and my research lab. For years, I watched students light up with curiosity about analytics, then freeze when faced with spreadsheets and statistical jargon. I saw professionals in consulting struggle to bridge the gap between technical analysis and strategic communication. And I realized something important: the problem wasn’t the data. It was how we talk about it. So I set out to create a bridge: between the technical and the human, between theory and practice, between intimidation and insight.

The book reflects my work across three worlds. Academia gave me the theory and frameworks. Consulting showed me the urgency and messiness of real-world application. Analytics provided the tools to connect them both. The result? A guide that’s rigorous enough for the classroom but practical enough to use on Monday morning.

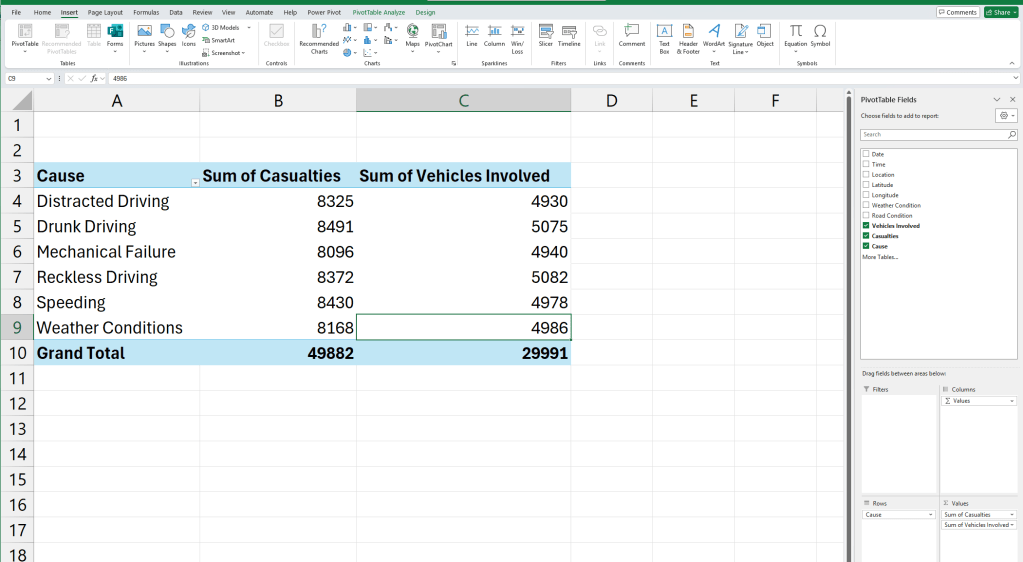

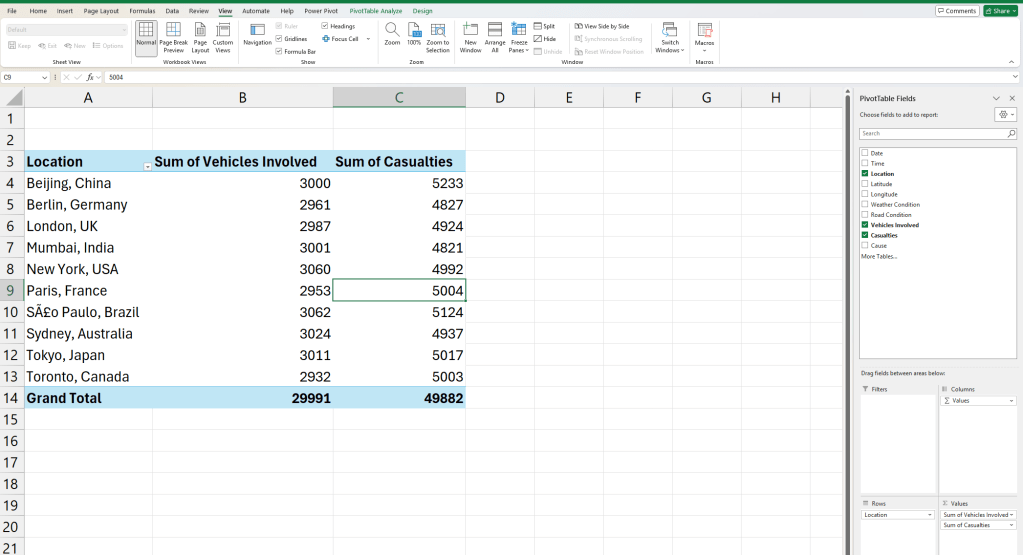

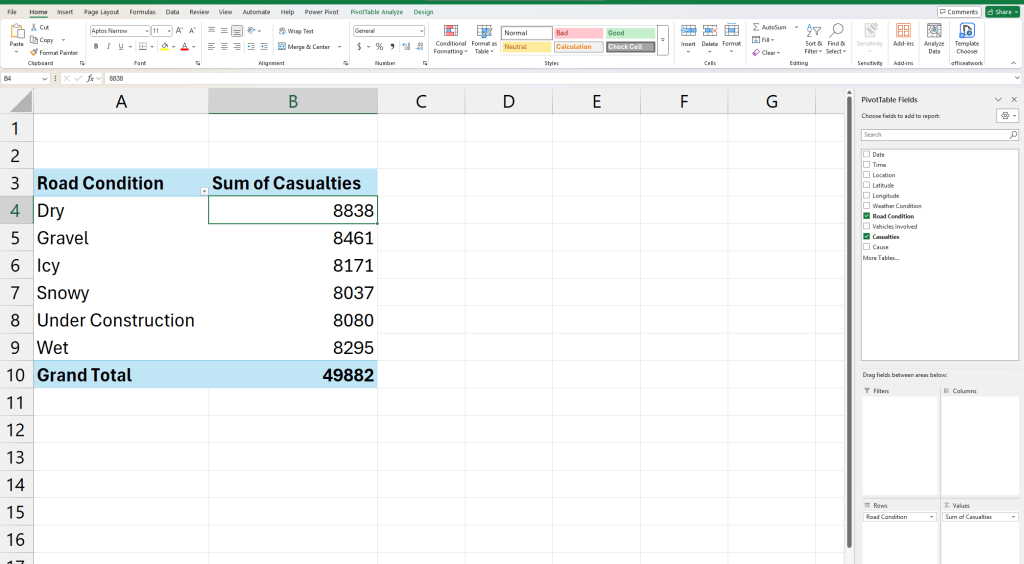

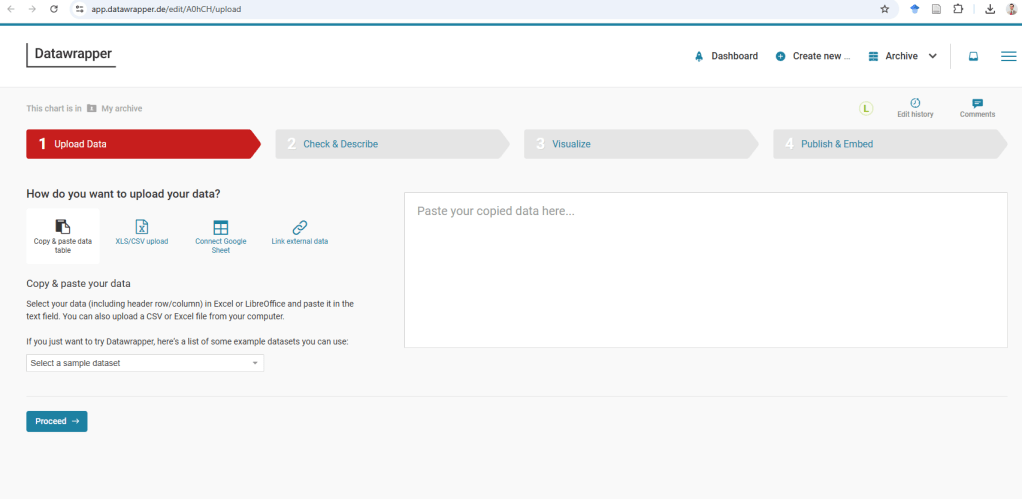

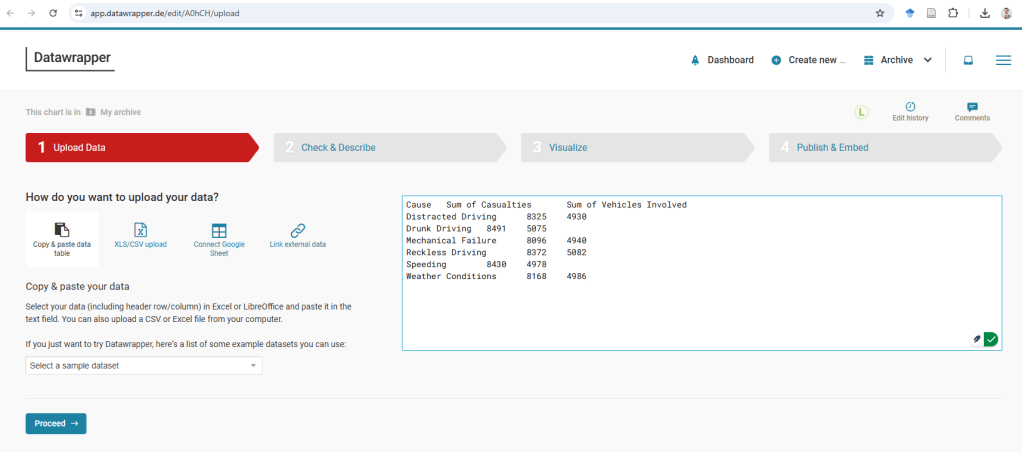

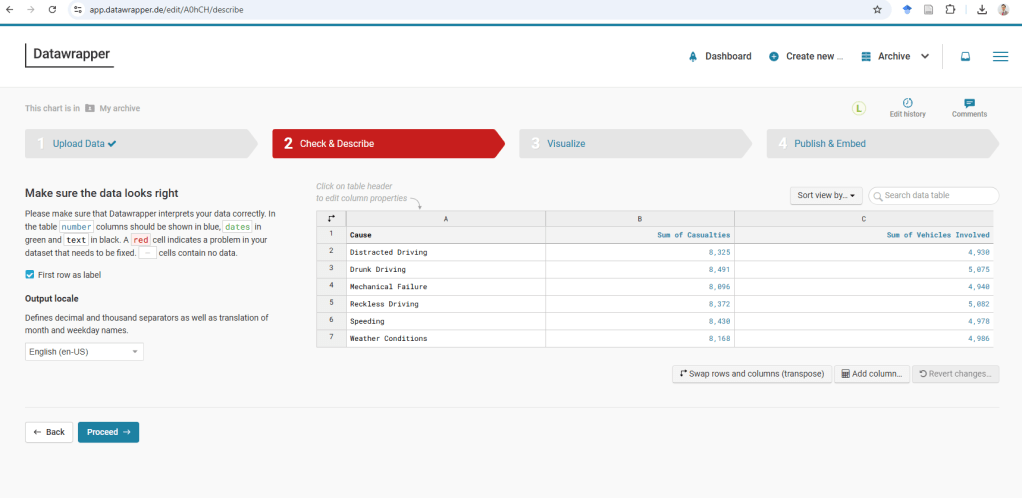

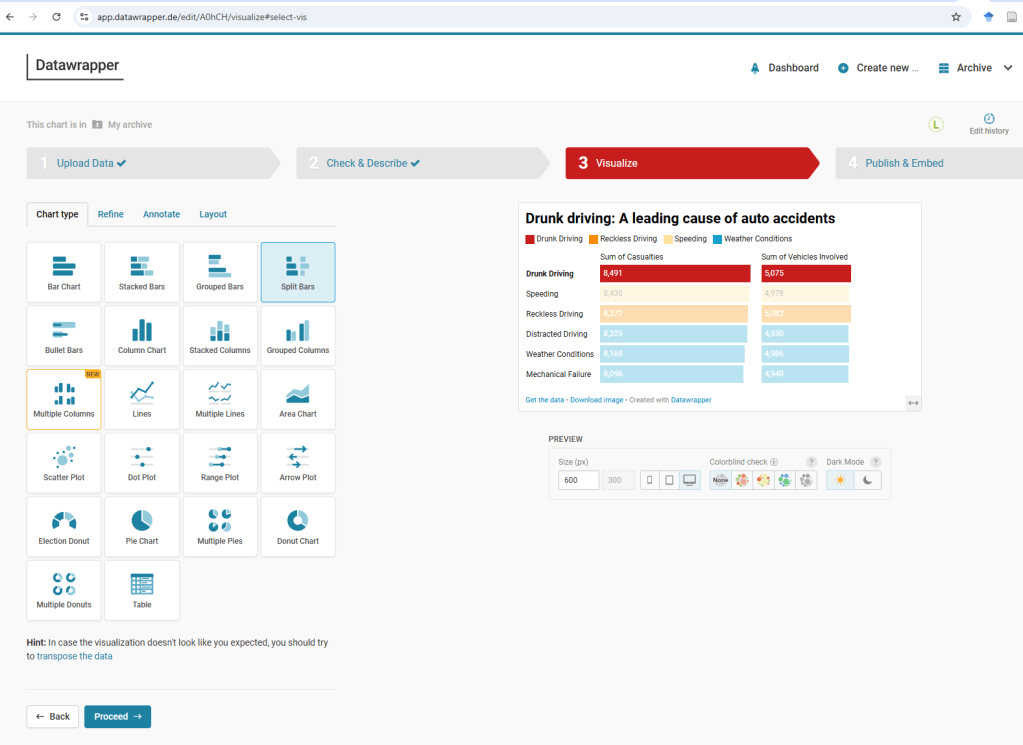

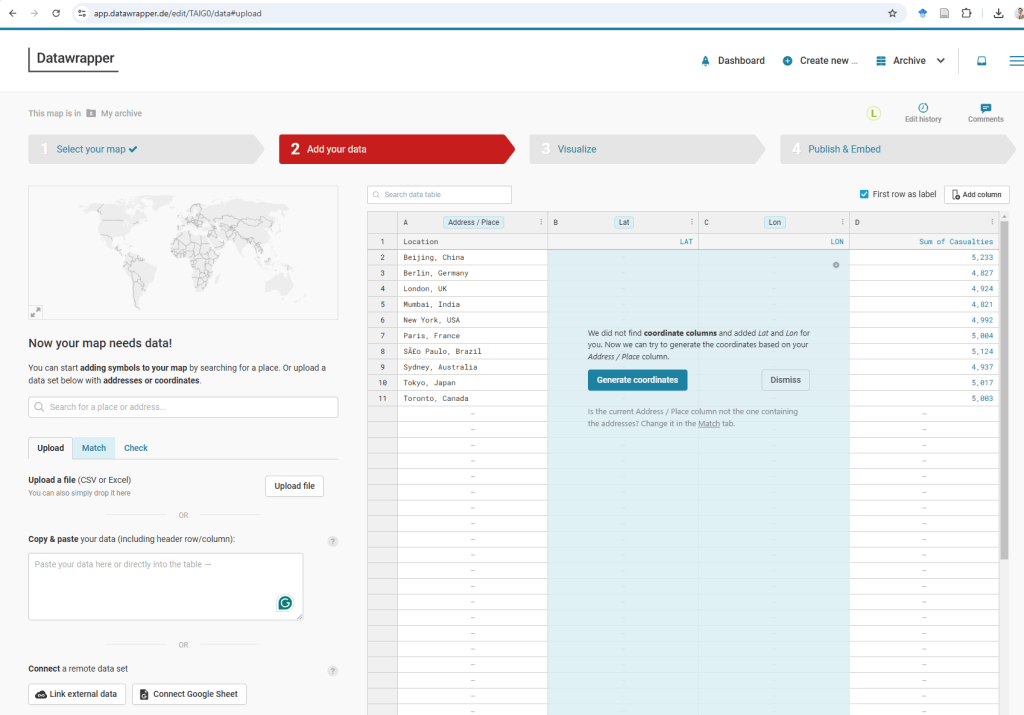

I built the book around what I call the DAV Framework—Discovery, Analysis, and Visualization. It mirrors how we naturally solve problems. Discovery is about starting with curiosity, asking the right questions, and finding reliable data. Analysis means exploring patterns and relationships to make sense of what you’ve found. Visualization transforms those insights into stories people can understand and act on. Think of it as a journey: from finding data in the wild to crafting a narrative that moves people to action. It’s not about being a “data person”—it’s about being a curious person who wants to understand the world more deeply.

Let me guess: When you hear “data analytics,” you think of complex formulas, intimidating software, and people who speak in statistical significance. I get it. That’s exactly why I wrote this book differently. Instead of starting with theory, I start with stories—real-world examples from social media, marketing campaigns, and everyday communication. The focus is on interpretation over formulas, on building confidence and curiosity instead of fear. Complex ideas are broken into manageable steps. I show you easy-to-use tools that work right away. Math anxiety? Left at the door. You don’t need to be a “data person” to do this work. You just need to care about understanding people better.

Here’s where things get interesting. AI has made analytics faster, more accessible, and frankly, a bit magical. It can summarize thousands of social media posts in seconds. It can visualize trends you’d never spot manually. It can predict patterns before they fully emerge. But here’s what AI can’t do: make ethical judgments, understand cultural context, replace human empathy, or decide what’s fair. The real power lies in collaboration—humans guiding AI with critical thinking, ethical reasoning, and contextual understanding. We’re not becoming more dependent on machines. We’re learning to be better collaborators with them. And that requires us to stay sharp, stay ethical, and stay human.

In our conversation, Dr. Collins and I dug into why you need both numbers and stories. Quantitative data tells us what is happening. Qualitative data tells us why. Imagine you’re analyzing a campaign and the numbers show engagement dropping off a cliff. Alarming, right? But why is it dropping? Are people offended by the messaging? Did the algorithm change? Is there a competitor campaign pulling attention? Did your audience simply move on to a new platform? A chart can’t tell you that. But interviews, open-ended survey responses, and thematic analysis can. When you combine both approaches, you capture the full picture: emotion, meaning, and behavior. In communication work, that synthesis isn’t optional; it’s essential.

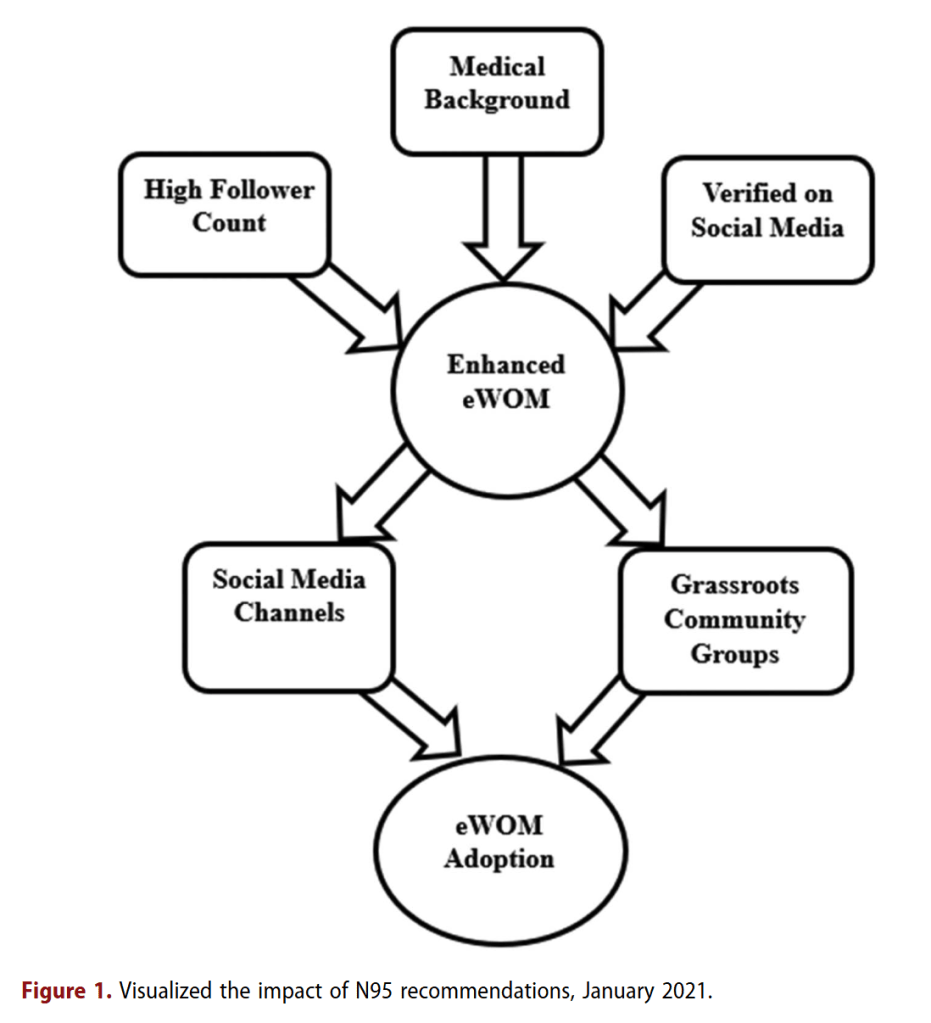

Which brings us to the ethical question we can’t ignore: Who’s included in our datasets—and who’s left out? Too often, marginalized voices are missing from the data that shapes media narratives, policy decisions, and business strategies. Algorithms trained on incomplete data perpetuate bias. Privacy gets sacrificed for convenience. Representation becomes an afterthought. Ethical analytics means being intentional about inclusion—whose voices are we hearing and whose are we missing? It means protecting privacy rather than exploiting it. It means asking whether our analysis reinforces inequity or challenges it. Data should empower, not exclude. If we’re not asking these questions, we’re not doing analytics responsibly.

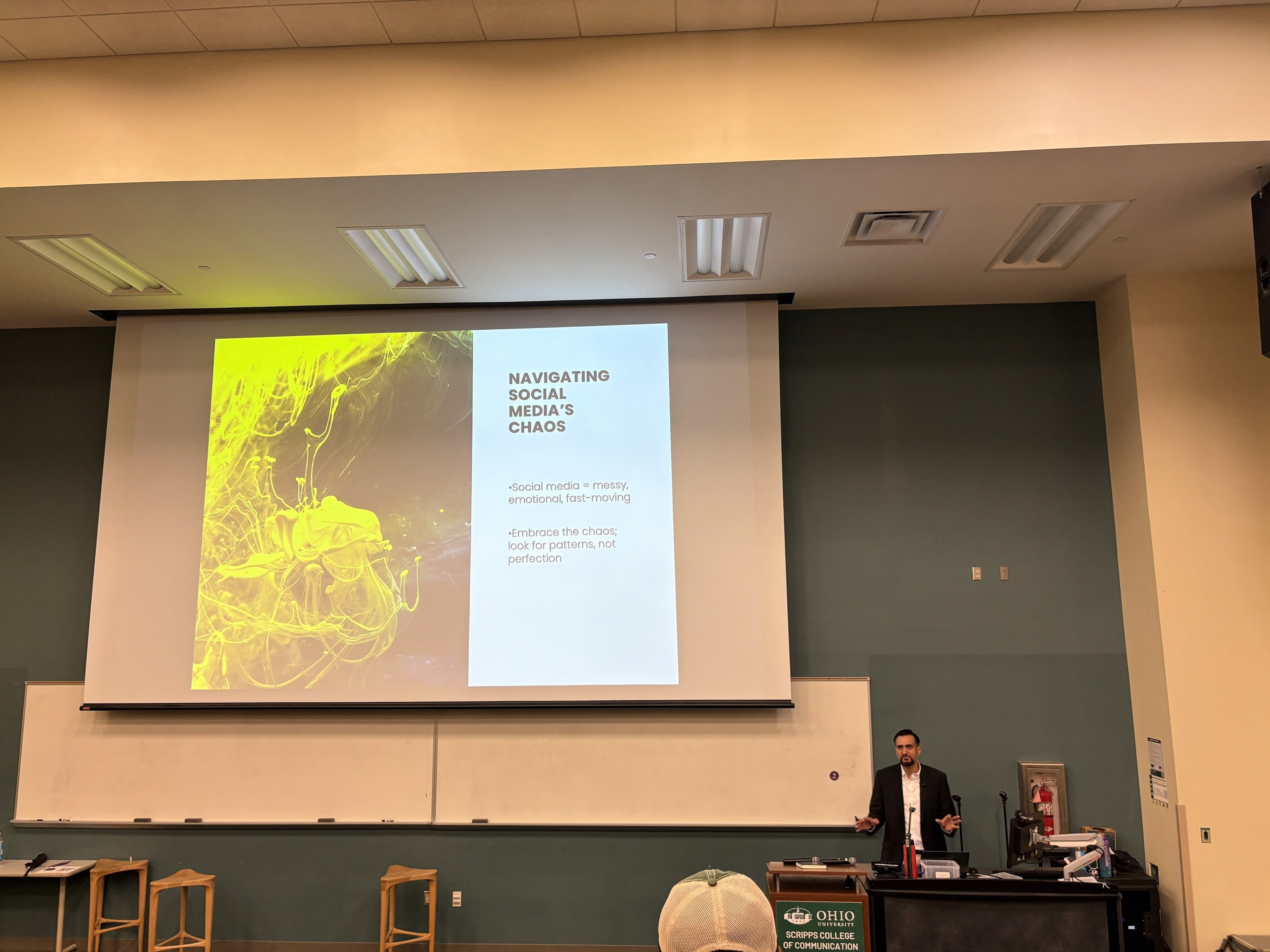

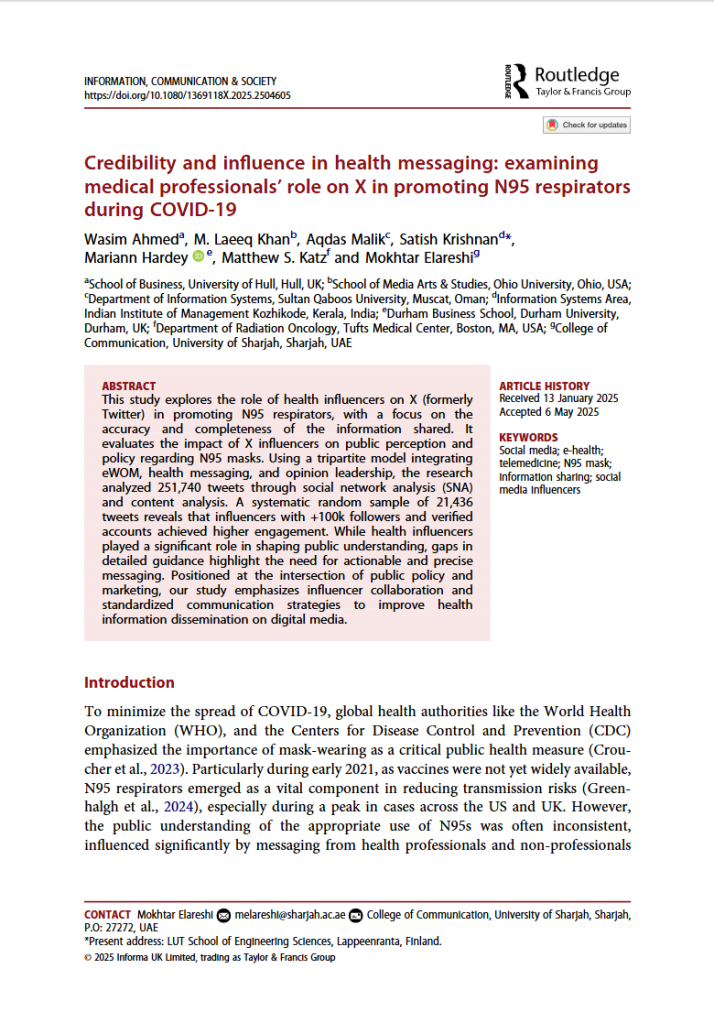

Social media data is chaotic, emotional, fast-moving, and contradictory. And that’s exactly what makes it so valuable. My advice? Embrace the mess. Use frameworks that help you organize chaos into themes. Focus on patterns rather than noise. And always—always—interpret data within its cultural and social context. A hashtag can be empowering or satirical depending on who’s using it. An emoji can signal joy or sarcasm. A trending topic can mean completely different things in different communities. Good analysts listen before they label.
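If you want to see what "organizing chaos into themes" can look like in practice, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `THEMES` lexicon, the keywords, and the sample posts are hypothetical, and simple keyword matching is only one possible first pass, not a method taken from the book. It cannot detect sarcasm or cultural context, which is exactly why the untagged and ambiguous posts still need a human reader.

```python
from collections import Counter

# Hypothetical theme lexicon: themes and keywords are illustrative assumptions.
THEMES = {
    "price": {"expensive", "cheap", "cost", "price"},
    "service": {"support", "helpful", "rude", "wait"},
}

def tag_themes(post: str) -> set[str]:
    """Return every theme whose keywords appear in the post (first pass only)."""
    # Lowercase each word and strip common punctuation before matching.
    words = {w.strip(".,!?#").lower() for w in post.split()}
    return {theme for theme, keywords in THEMES.items() if words & keywords}

def theme_counts(posts: list[str]) -> Counter:
    """Count how many posts touch each theme."""
    counts = Counter()
    for post in posts:
        counts.update(tag_themes(post))
    return counts

# Made-up sample posts, not real data.
posts = [
    "Way too expensive for what you get!",
    "Support was helpful, but the price is high.",
    "Love the new design",
]

counts = theme_counts(posts)
# Posts that match no theme are set aside for human reading, not discarded.
untagged = [p for p in posts if not tag_themes(p)]
```

The counts show the pattern, and the `untagged` list is where the listening starts: those are the posts your lexicon does not yet understand.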

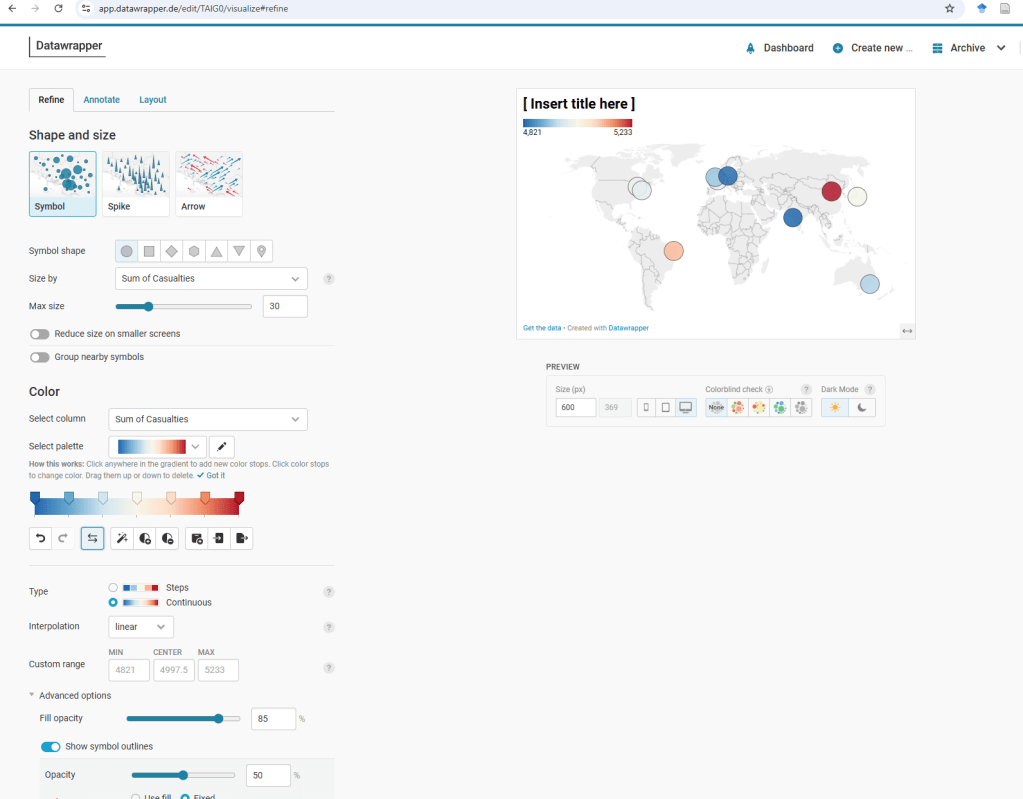

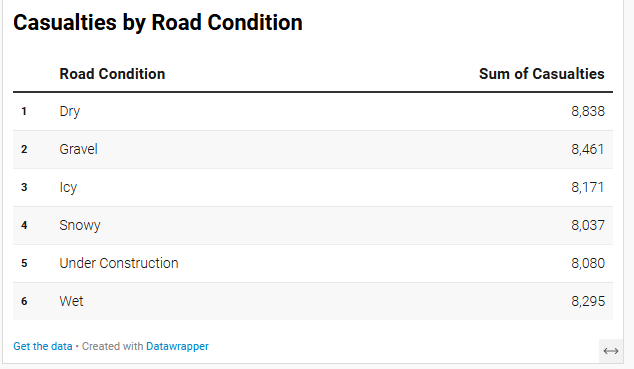

Most people don’t care about your data. They care about what it means. A good data story isn’t about showing more charts—it’s about showing the right ones that answer meaningful questions. The best stories simplify complexity without dumbing it down. They speak to both head and heart—logic and emotion. They make audiences remember and inspire them to act. Before you create any visualization, ask yourself: “What do I want my audience to do after seeing this?” That single question will transform how you communicate insights.

Here’s my bold prediction: The next generation of communicators and business leaders won’t see analytics as a technical skill. They’ll see it as a form of literacy—as essential as reading, writing, and critical thinking. They won’t use data just to measure. They’ll use it to understand. They’ll blend AI tools with human insight to build communication systems that are more transparent, more empathetic, and more responsive to real human needs. In that world, data analytics isn’t just an advantage. It’s a responsibility.

Dr. Collins asked the kinds of questions that made me think deeply about ethics, about empathy, and about what kind of communicators we want to become. Watch the full conversation and join us in exploring how to harness the power of data and AI while keeping human values at the center of everything we do.

Questions? Thoughts? Let’s talk. Drop a comment below or connect with me—because the future of communication isn’t just about smarter algorithms. It’s about wiser humans using them.

Amazon:
