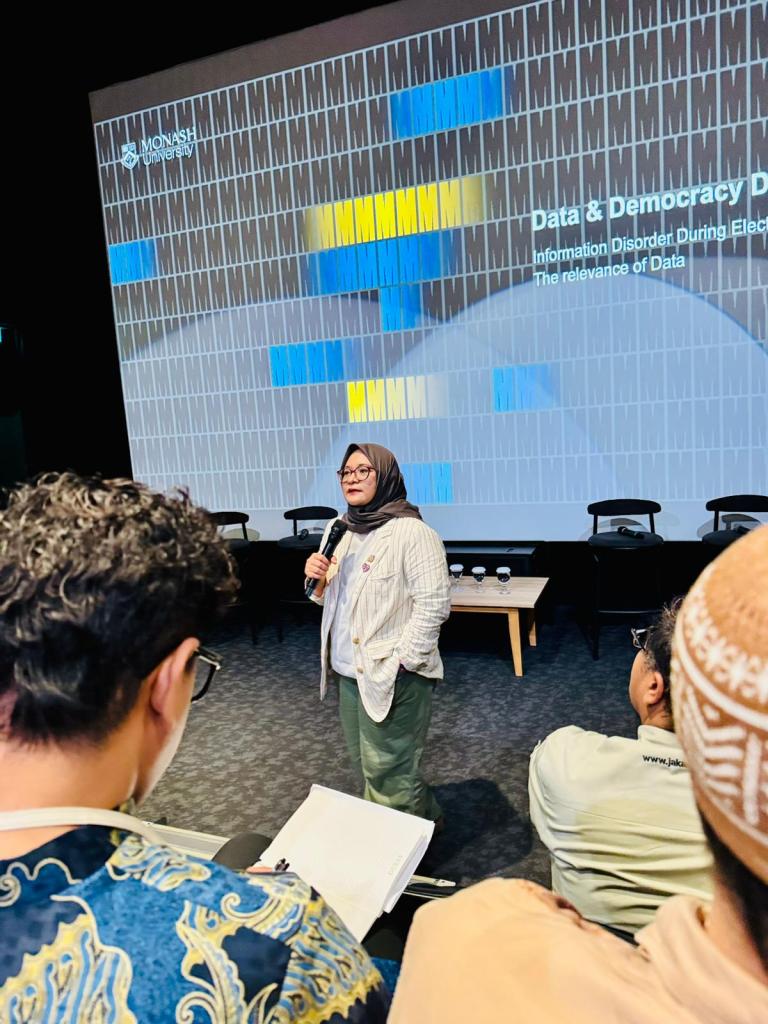

I recently had the incredible honor of delivering the keynote presentation at an event organized by the Monash Data and Democracy Research Hub (MDDRH) at Monash University Indonesia.

As someone deeply invested in the intersection of social media, technology, and audience engagement, I found this invitation both humbling and inspiring. The event was organized by Dr. Ika Idris, Co-Director and Associate Professor of Public Policy and Management, and her team at Monash. It was particularly meaningful because Dr. Idris is a proud alumna of Ohio University and an active member of the external research team of the SMART Lab, which I have the privilege of directing in the Scripps College of Communication.

The event provided an unparalleled platform to engage with a diverse and dynamic audience on a topic of growing global importance: how democratic principles can guide algorithm design to foster trust, inclusivity, and meaningful engagement.

About the Monash Data and Democracy Research Hub

The MDDRH at Monash University Indonesia is a groundbreaking interdisciplinary initiative. Bringing together expertise in data science, cybersecurity, social and political sciences, public policy, and business, the hub serves as a focal point for understanding the critical role of data in shaping democracy in the digital age. Its mission is ambitious yet essential: to foster data-driven research, boost digital literacy, advance digital democracy, and help shape policies for a more informed and resilient society.

Being invited to speak at an event hosted by such a forward-thinking institution was an honor and a privilege. The MDDRH’s commitment to fostering ethical, responsible, and inclusive data practices aligns closely with my research and professional passions, making this an ideal platform to share insights and strategies for building a better digital future.

Keynote Highlights: Rethinking Social Media Algorithms

My presentation focused on the urgent need to rethink the algorithms driving social media platforms, which are often optimized solely for engagement metrics like clicks, shares, and time spent online. While effective at capturing attention, these algorithms can unintentionally amplify misinformation, sensationalism, and societal polarization—undermining the democratic values they should be supporting.

Key Themes of the Presentation:

- The Role of Algorithms in Public Discourse

I discussed how algorithms influence what we see, share, and believe online, and the profound implications of these mechanisms for democratic processes.

- Challenges of Engagement-Driven Models

I highlighted the unintended consequences of current algorithmic models, including the spread of misinformation, the formation of echo chambers, and the erosion of public trust in digital platforms.

- Integrating Democratic Values in Algorithm Design

Drawing from democratic theory, I proposed a shift toward “democratic algorithms” that prioritize inclusivity, transparency, and civic value over pure engagement metrics.

- Real-World Examples and Actionable Strategies

The presentation featured practical steps for technologists, policymakers, and platform users to collaborate on ethical algorithm design. These strategies included participatory governance frameworks, regular bias audits, and initiatives to promote media literacy.

Audience

The audience was as diverse as the hub itself, comprising academics, government officials, policymakers, technologists, and civil society advocates. Their questions, perspectives, and enthusiasm demonstrated the shared commitment to creating a more equitable, informed, and inclusive digital landscape.

A Fulfilling Experience

Delivering this keynote was not only a professional highlight but also a deeply personal and fulfilling experience. I have always enjoyed delivering workshops and presentations because of the human connections they foster. This particular event allowed me to bring my expertise from Ohio University in the USA to a global stage, engaging with professionals and scholars who are at the forefront of addressing these challenges.

What made this experience especially meaningful was the alignment between my research and the practical needs of those working to strengthen democracy in the digital age. It also gave me the chance to bring to light the antecedents of trust in the domain of audience engagement. It is always amazing to see how academic insights can inform real-world strategies to tackle pressing societal issues.

Gratitude and Reflection

I am profoundly grateful to the Monash Data and Democracy Research Hub for the gracious invitation and the opportunity to be part of their mission to advance digital democracy. Their dedication to fostering interdisciplinary dialogue and driving innovative solutions is nothing short of inspiring.

This keynote was more than just a presentation—it was a call to action. As the digital age continues to reshape public discourse, the responsibility to ensure that technology serves the common good becomes ever more critical. I look forward to continuing this important conversation and contributing to the shared goal of building resilient digital democracies worldwide.

Thank you to Monash University Indonesia and the MDDRH team for making this experience so memorable and impactful!